Adding a Camera

These days you can find cameras just about everywhere - on our cars, in our houses, and of course all over our phones. But what about robots? Getting robots to see and understand the world like we do is one of the biggest areas of research in robotics, and today we’re going to look at the first half of that pipeline - getting data from a camera into our robot. By the end of this tutorial we’ll be able to connect a camera to our own robot and have it beam an image back. Like the last tutorial, on lidar, this will be into four main sections and you can use these links to jump down:

How cameras and images work

While it’s exciting to get a camera working in ROS for the first time and it’s tempting to rush ahead, things will make a lot more sense if we go through some theory first, especially if you’re not familiar with this stuff. This is a massive topic that some people do an entire university degree on, so the notes here are really just a bird's-eye-view.

Types of cameras

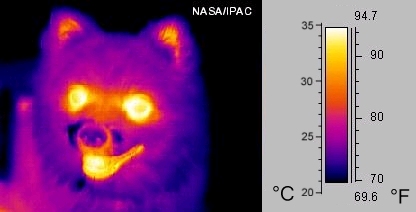

When you think about a camera, there’s probably something that first jumps into your head. Cameras look at the world directly in front of them and turn that into a 2D grid of coloured pixels. Although this is the type of camera we'll mostly be using for this tutorial, it’s worth acknowledging the huge variety of cameras that are out there. Just a few of the ways that cameras can vary are:

- Sensor type (e.g. colour, monochrome/greyscale, thermal, IR)

- Optics (e.g. fisheye lens, wide FOV, 360deg, focal lengths)

- Frame rate (e.g. high-speed cameras)

Most of the concepts covered in this tutorial will in some way apply to all of these.

One particular variety of camera is the depth camera which will be covered in greater depth (pun intended) in the next tutorial, but make sure to read this one first as it will be used as a foundation.

Capturing & Storing images

When a camera takes an image, the light that is bouncing around the world passes through a lens and an aperture, and then onto a sensor. This data is recorded and stored as pixels. This is a 2D array of measurements of the intensity of light at that point on the sensor.

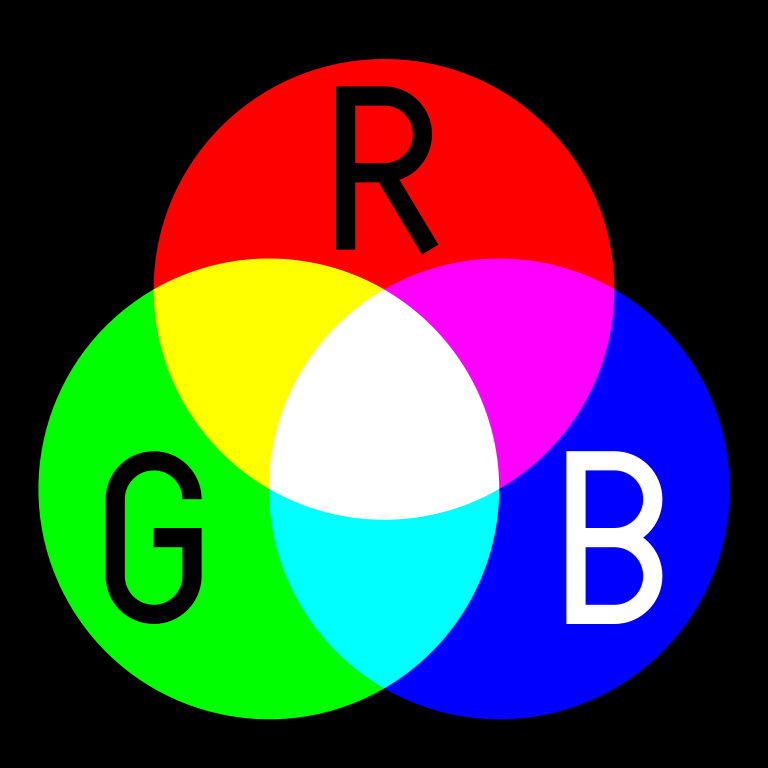

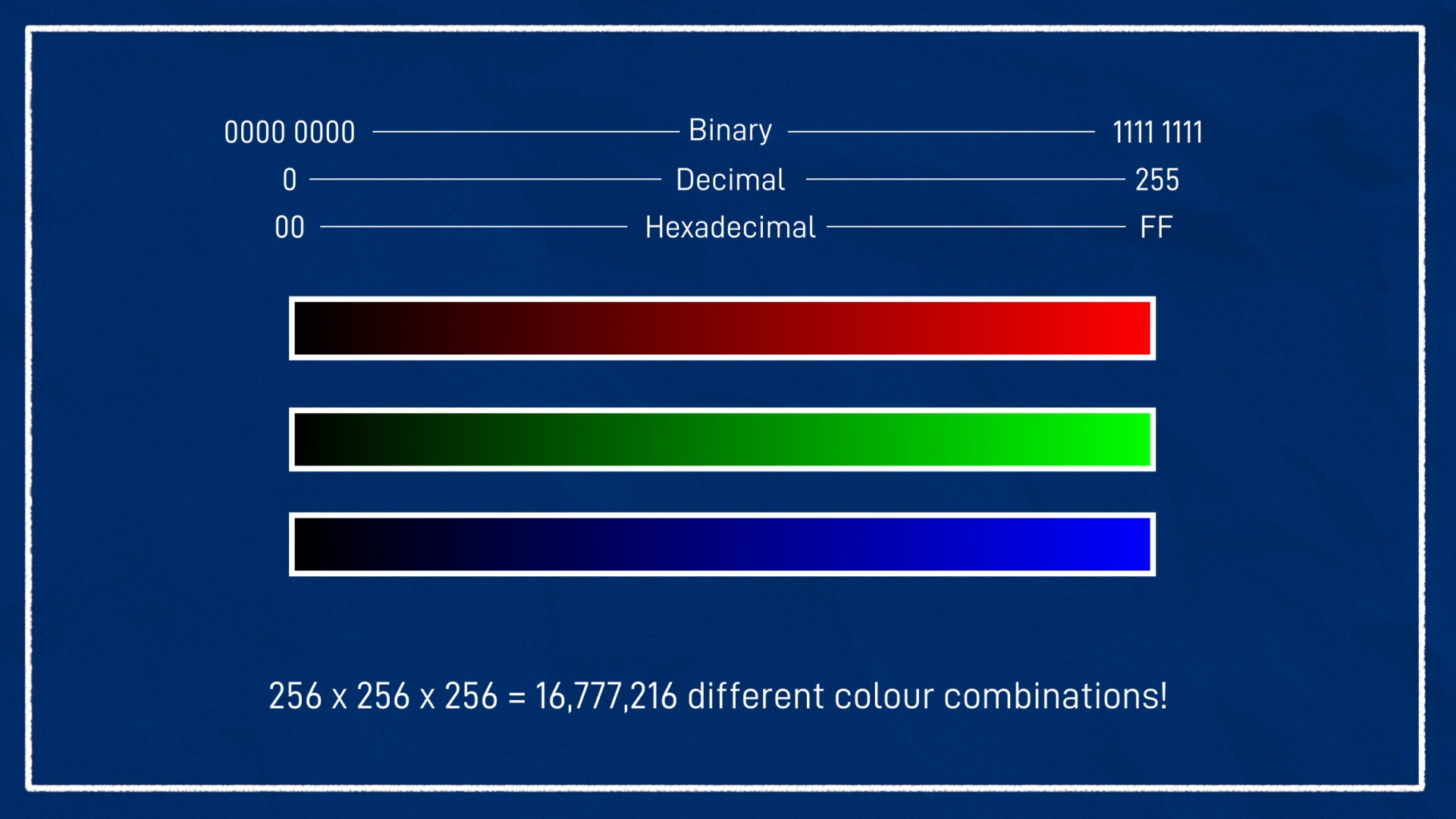

For a grayscale image, this is pretty straightforward - one measurement per pixel. But for colour images it’s a bit more complex. There are a few different methods that can be used, but the most common one on computers is to split the colour information up into three different channels - red, green and blue. By combining different amounts of each of these base colours, we can make pretty much any colour. None of any colour is black, maximum of all three combined is white, and anything in between.

Most commonly, we use 8 bits per colour channel, per pixel, which gives us a range of 256 different values, 0 as a minimum and 255 as a max. And we usually order the three colours as red, green, blue, or RGB.

Sometimes though, you’ll come across other modes. For example, your camera might give you 16 bits per pixel, or you use a library like OpenCV which stores data as BGR. In any case, the overall principle is the same.

Image Compression

This format (3x8-bit values per pixel) is very easy for computers to work with, but isn’t particularly space-efficient, so we often want to utilise compression. This is especially important when we want to send image messages over a network, a common process in robotics.

To save space, we could just resize the image and throw away a bunch of pixels, but formats like JPEG and PNG can be a bit smarter than this, they let us shrink the data size without shrinking the actual image - we don’t lose any pixels. The computer uses some clever algorithms to throw away less important information - for example if there is a big dark patch, rather than storing “0 red, 0 blue, 0 green” for every individual pixel it may be able to group them. PNG does this in a way that no information is lost at all, whereas JPEG is lossy, sometimes pixels that are near each other can blend together a bit.

Focal Length

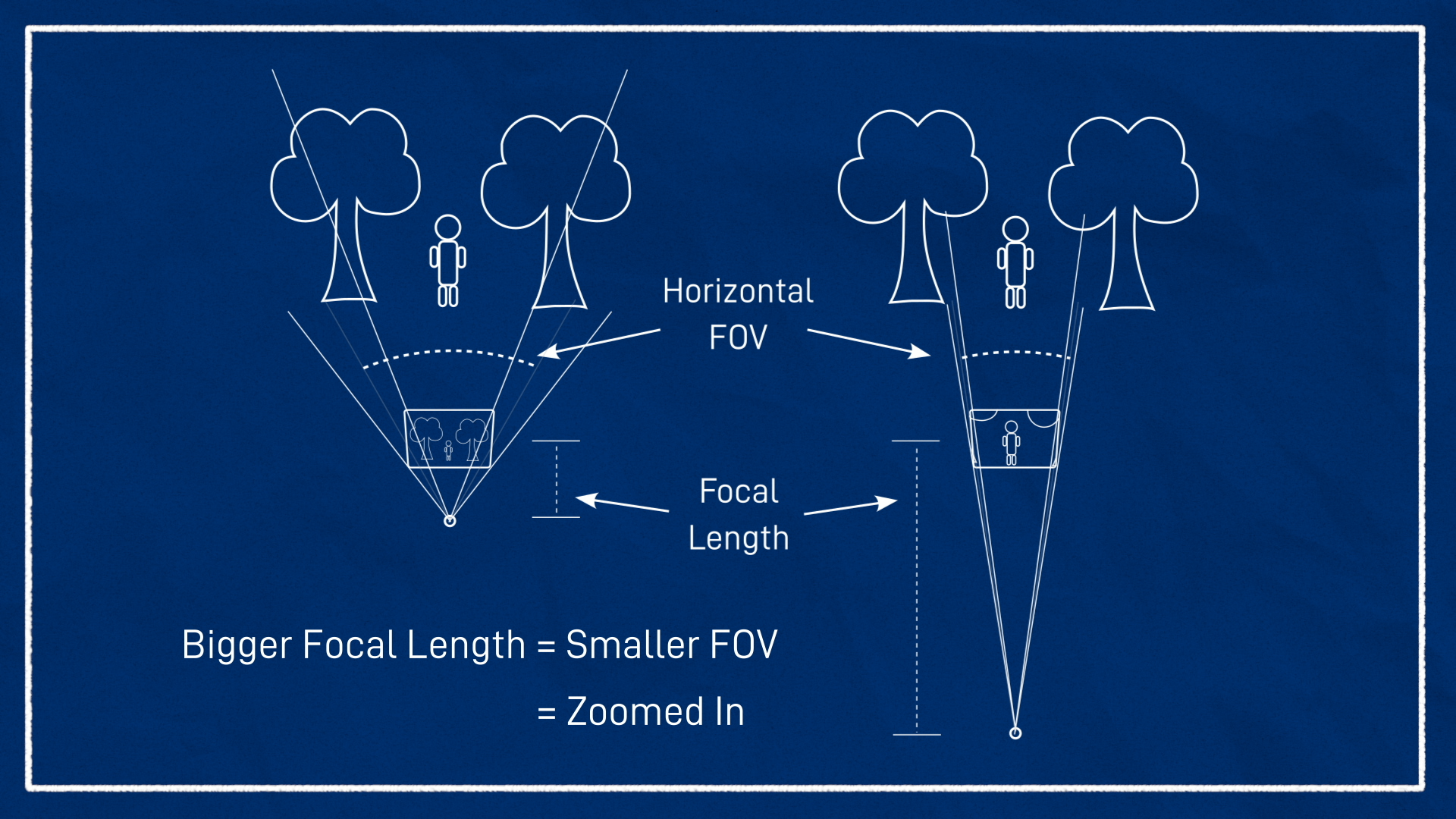

There are many parameters that contribute to the final image of a camera, but one worth having a basic understanding of is focal length. This is how far away the sensor inside the camera is away from the lens, but it’s kind of meaningless without knowing the size of the sensor. Instead it’s often easier to talk about the horizontal field-of-view, the angle spanned from the left side of the picture to the right side.

Then, when we increase the focal length, we decrease the field-of-view, making that angle tighter, which is zooming in. You can see in the image below that with a large focal length it can only see just above the person, rather than all the trees.

Often in robotics, we care about seeing as much as we can around us, and so we use cameras that are zoomed out with a fairly wide-angle field-of-view, or a short focal length.

Also, if you do ever need to perform the conversions, here’s the equation, it’s just some plain trigonometry.

Note that the picture is very much not to scale and is also not quite a proper depiction of the lens-sensor interaction, but hopefully it helps to understand the effect of focal length adjustment. For more detail, check out Wikipedia and the referenced links.

Coordinate systems

The last thing to note briefly is coordinate systems. Typically when working with images, the X direction is from left-to-right, and the Y direction is top-to-bottom. According to our right-hand rule, this would lead to a Z direction into the page (or pointing forward from the camera). This will come up again soon when we look at how ROS deals with things.

Cameras and Images in ROS

Now that we’ve got a general understanding of how images and cameras work, let’s see how they’re used in ROS.

Camera driver node & Image messages

First up, just like the LIDAR in the previous post, we’ll always have a camera driver node. This is a node that is designed to communicate with whatever particular camera hardware you have, and publish the stream of data. It’s common for the driver node to be able to control various aspects of the camera stream, e.g. resolution and frame rate.

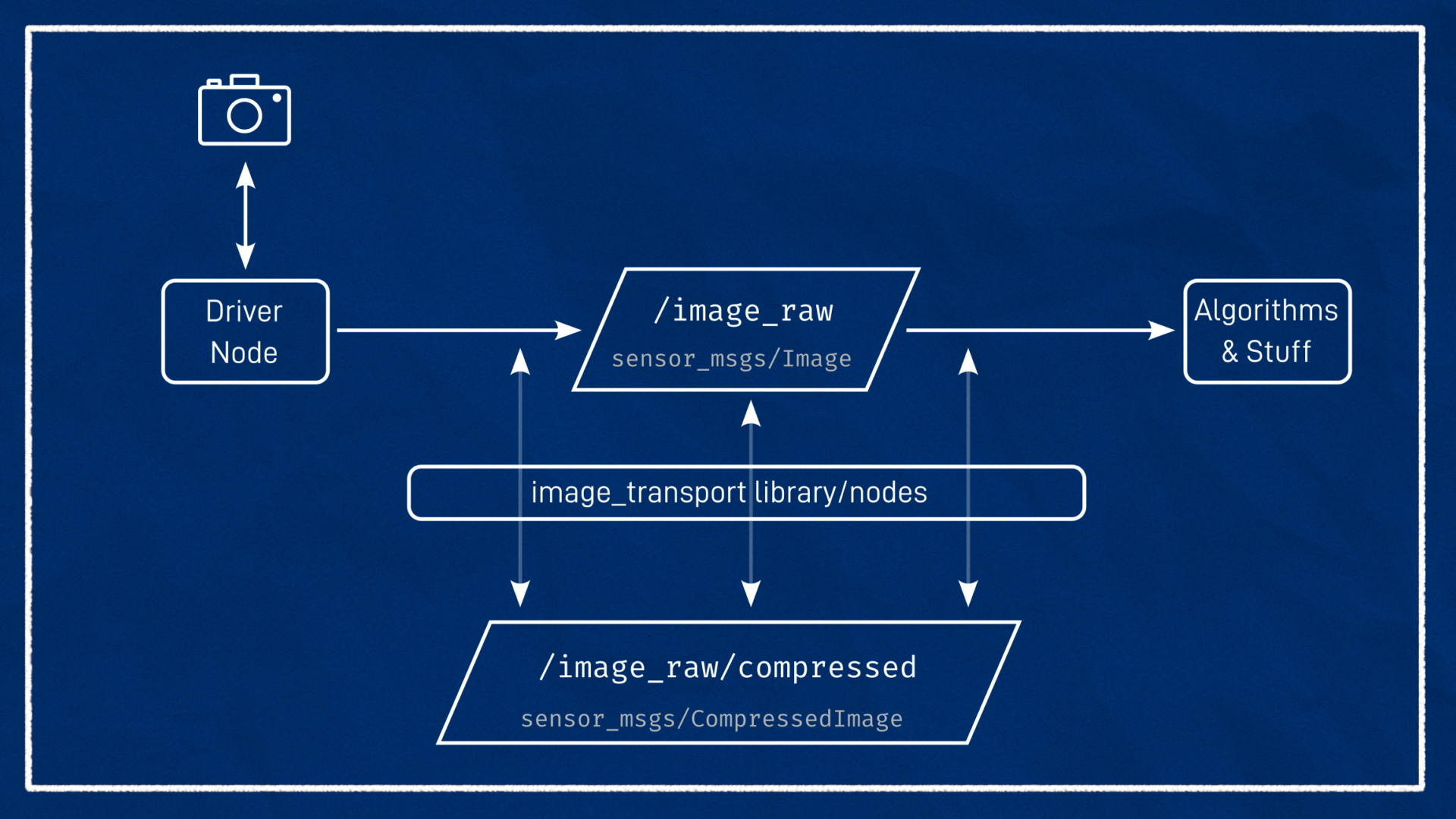

That driver node then takes the data stream coming from the camera, and publishes it to a topic of type sensor_msgs/Image. Again, like we saw with LIDAR, that means that algorithms just need to be written to work with an Image message, and as long as your camera has a driver, it will work, which is great. Note that there is also another type called sensor_msgs/CompressedImage that is designed to work with compressed images.

The unprocessed Image topic published directly by a camera driver is often called /image_raw (to indicate an unprocessed image, not to be confused with the RAW format used in professional photography). If there is a compressed version then it will just add /compressed to the end of the base topic - so in this case it would be /image_raw/compressed.

To assist in dealing with compression, ROS provides the image_transport tools. If nodes are written correctly, image_transport can handle all the compression/decompressions automatically without much trouble. If they aren't (e.g. the drive is only publishing a compressed image), image_transport provides its own node to "fill in the gaps", which we’ll explore later.

Note that Image messages have a Header, which contains the time of the image measurement and also the coordinate frame of the camera that took it.

Camera Info

ROS provides another type called sensor_msgs/CameraInfo. This provides a bunch of metadata about the camera - the lens distortion and so on - so that the data from the image can be correctly interpreted by the algorithms. The topic is typically called /camera_info and is in the same namespace as the Image topic, e.g. /my_camera/image_raw would match to /my_camera/camera_info.

In this tutorial we won’t spend any more time looking at the camera info.

ROS provides the

image_proc/image_pipelinepackages to assist in converting the/image_rawtopic into more useful data, which is often published to topics with names like/image_coloror/image_rect. Again, that's just out of the scope of this tutorial, but you might find theCameraInfodocumentation and ROS 1image_procdocumentation helpful.

Coordinate Frames

One of the most confusing aspects of working with cameras in ROS is the way coordinate frames are used.

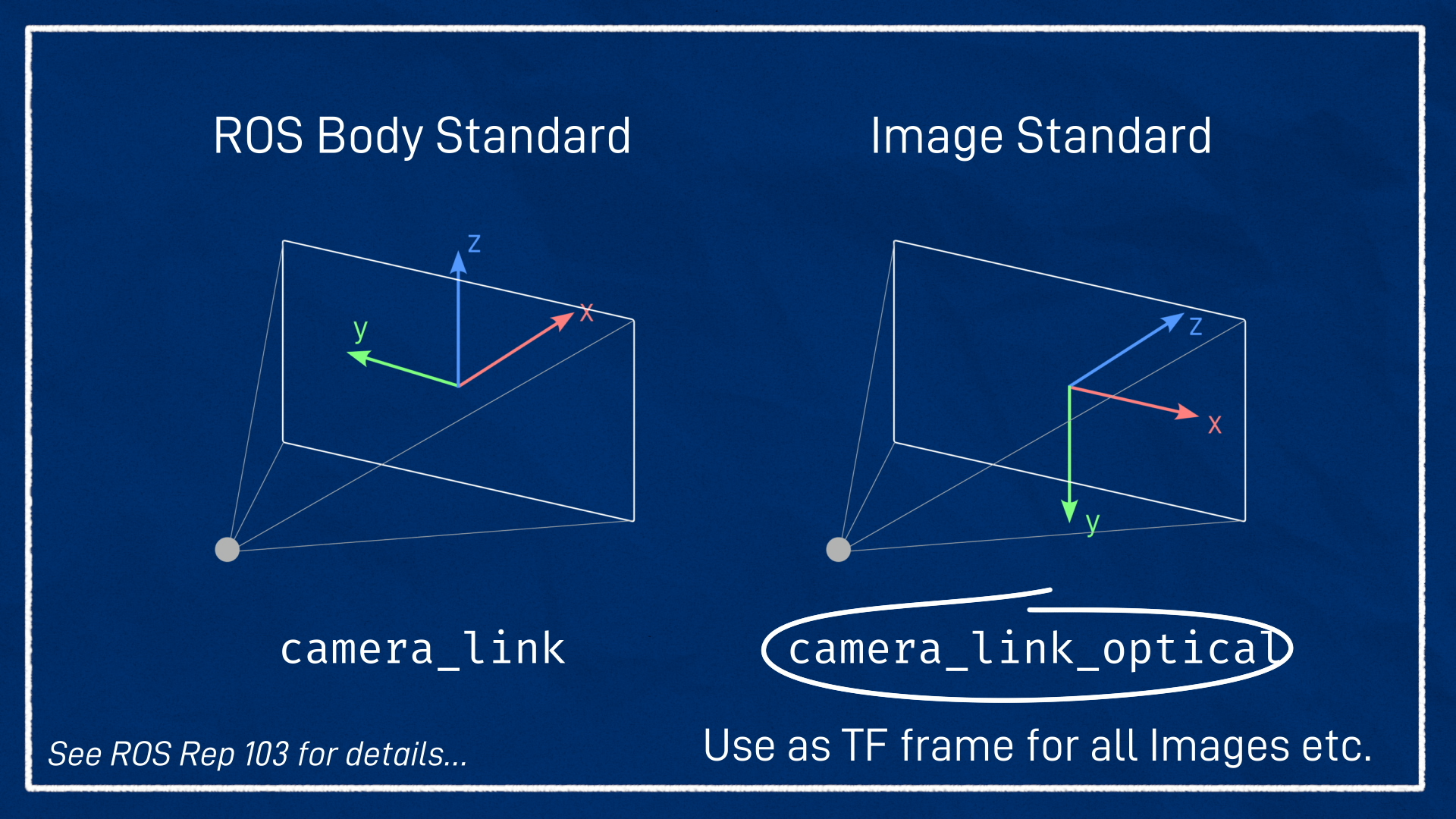

In order for the camera data to be processed correctly it needs to be associated with a coordinate frame - no big deal. But what orientation should the frame be in? We’ve seen in earlier tutorials that in ROS, the standard orientation is to have X forward, Y left, and Z up. However as we saw just above, the standard outside of ROS when working with cameras and images is to have X right, Y down, and Z forward.

To accommodate this, the standard approach is to create two different frames that are located in the same location, one named something like camera_link (following the ROS convention) and the other camera_link_optical (following the standard image convention). The Image and CameraInfo message headers should reference the camera_link_optical frame, but the other link is still there if needed.

We’ll see this in action very soon when we update our URDF.

Simulating a Camera in Gazebo

Just as in the previous tutorial with the LIDAR, we’ll first get a camera simulated and working in Gazebo before we try to connect to a real one. If some of the concepts are being skimmed over too fast, try checking that tutorial for clarification.

Adding a Camera link/joint to our URDF

We'll start off very similar to the lidar (so similar that you could probably copy lidar.xacro and replace a couple of names):

- Create a new file, called

camera.xacroin thedescriptiondirectory - Add the

<robot>tags - Add

<xacro:include filename="camera.xacro" />to ourrobot.urdf.xacro - Create a link called

camera_linkand a joint calledcamera_joint, attached (fixed) to thechassis

For this example, the camera origin will be at the front-centre of the chassis (305mm forward in X and 80mm up in Z). Note that at this point we are still following the ROS convention of "X-forward". I have not added any rotations as my camera is facing directly forward, but if yours is tilted you can add the appropriate rotation.

<joint name="camera_joint" type="fixed">

<parent link="chassis"/>

<child link="camera_link"/>

<origin xyz="0.305 0 0.08" rpy="0 0 0"/>

</joint>

Then we can add a link tag called camera_link, with some visual properties. In this example we'll represent the camera as a small, red, rectangular prism.

<link name="camera_link">

<visual>

<geometry>

<box size="0.010 0.03 0.03"/>

</geometry>

<material name="red"/>

</visual>

</link>

Since my camera is small and fixed to the chassis, I am skipping the collision and inertial parameters. If your camera protrudes from the chassis it should probably have collision, and if it is not on a fixed joint it will need inertia.

And again, remember to add a <gazebo> tag with your material colour.

<gazebo reference="camera_link">

<material>Gazebo/Red</material>

</gazebo>

Add the optical link/joint

Now we need to add the “dummy” link called camera_link_optical mentioned earlier, to account for the different coordinate standards - transforming from the standard ROS robot orientation (x-forward, y-left, z-up) to the standard optical orientation (x-right, y-down, z-forward).

For a camera link called camera_link, the following block achieves this and can be placed anywhere in the file.

I’d usually place it between the

camera_linklink tag and the<gazebo>tag, since it’s kind of a reinterpretation of thecamera_linkrather than truly being its own link.

<joint name="camera_optical_joint" type="fixed">

<origin xyz="0 0 0" rpy="${-pi/2} 0 ${-pi/2}" />

<parent link="camera_link" />

<child link="camera_link_optical" />

</joint>

<link name="camera_link_optical"></link>

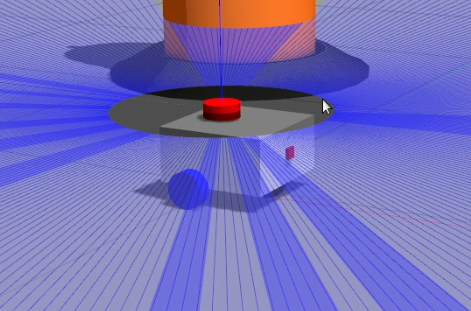

If we were to rebuild and rerun now, we’d see the following (note, at this point you should probably set <visualize> to false for the lidar to avoid those pesky blue lines):

Camera Sensor Simulation

Finally, now that our links and joints are set up we can add our <sensor> tag inside the <gazebo> tag, to tell Gazebo to simulate a camera. Note again that this is the Gazebo tag for camera_link, not the optical one.

This is very similar to the LIDAR in the last tutorial in that we have:

- Things that are common to all sensors

- Parameters specific for the

camerasensor - A ROS plugin

The only changes are the type (and name) of the sensor (it's now a camera), and the filename (and name) of the plugin, which we'll take a closer look at soon.

<sensor name="camera" type="camera">

<pose> 0 0 0 0 0 0 </pose>

<visualize>true</visualize>

<update_rate>10</update_rate>

<camera>

...fill in next section...

</camera>

<plugin name="camera_controller" filename="libgazebo_ros_camera.so">

...fill in next section...

</plugin>

</sensor>

If any of that is confusing, it's worth going back to check out the lidar tutorial. Now let's explore the differences. First is the parameters in the <camera> tag.

I've set the following:

- Horizontal field-of-view is 1.089 radians, to roughly match the Pi camera

- The image is 640x480 pixels, stored as 8-bit RGB

- The clipping planes (distance the camera can see) is set to a minimum of 0.05m and a maximum of 8m

<camera>

<horizontal_fov>1.089</horizontal_fov>

<image>

<format>R8G8B8</format>

<width>640</width>

<height>480</height>

</image>

<clip>

<near>0.05</near>

<far>8.0</far>

</clip>

</camera>

Finally, the <plugin> tag. Once again, this is the way we get data in and out of Gazebo (in this case, to ROS using the libgazebo_ros_camera.so plugin). This one is similar to the lidar but a bit simpler. The only parameter we'll set is the frame_name, but this time we need to set it to camera_link_optical (NOT camera_link). This will ensure the Image topics have their header set correctly.

<plugin name="camera_controller" filename="libgazebo_ros_camera.so">

<frame_name>camera_link_optical</frame_name>

</plugin>

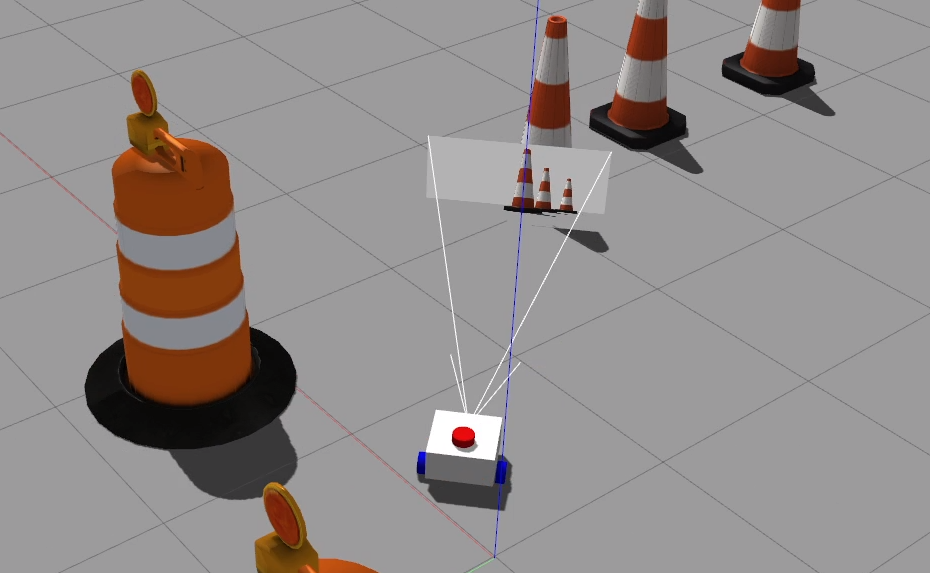

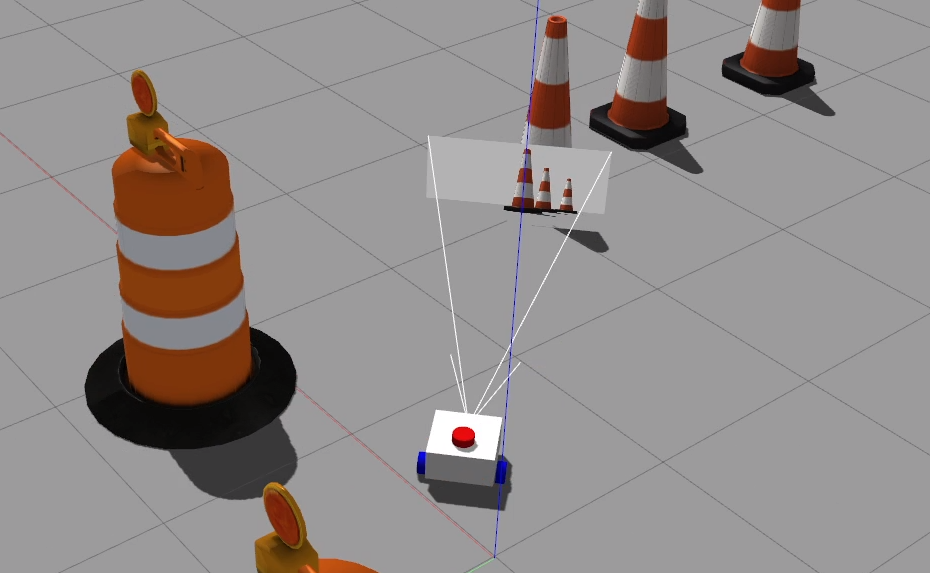

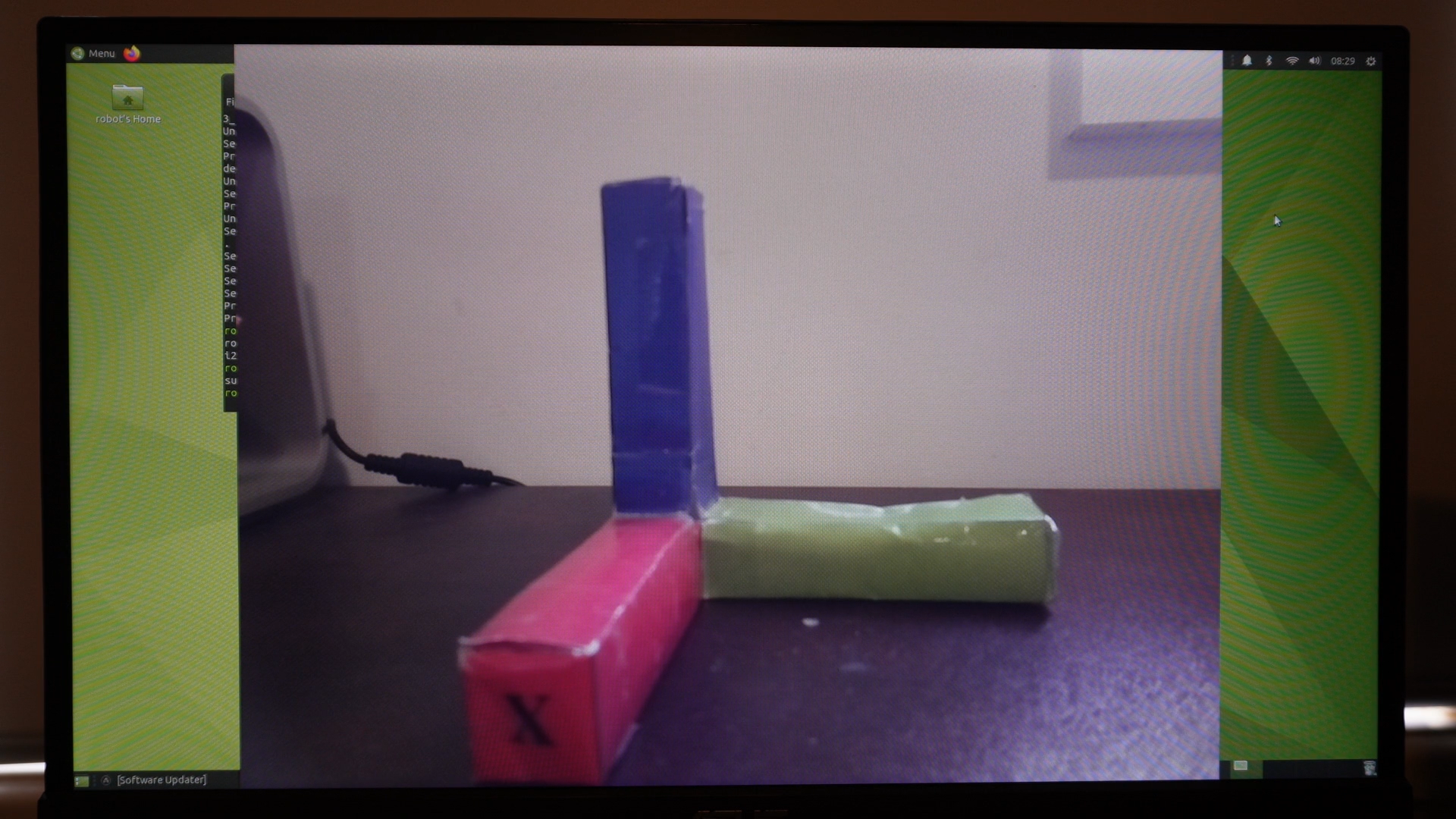

Now, we can relaunch Gazebo and we’ll be simulating a camera! Because we have visualize set to true, we get a little preview of what the camera can see. If we add some objects to the scene, we can see that the camera sees them.

That tells us it’s working inside Gazebo, but how do we know if it’s made it out to the rest of ROS?

Visualising Camera Data

When we want to check if our image data is being published correctly, it’s not usually practical to use ros2 topic echo - we want to actually see the data.

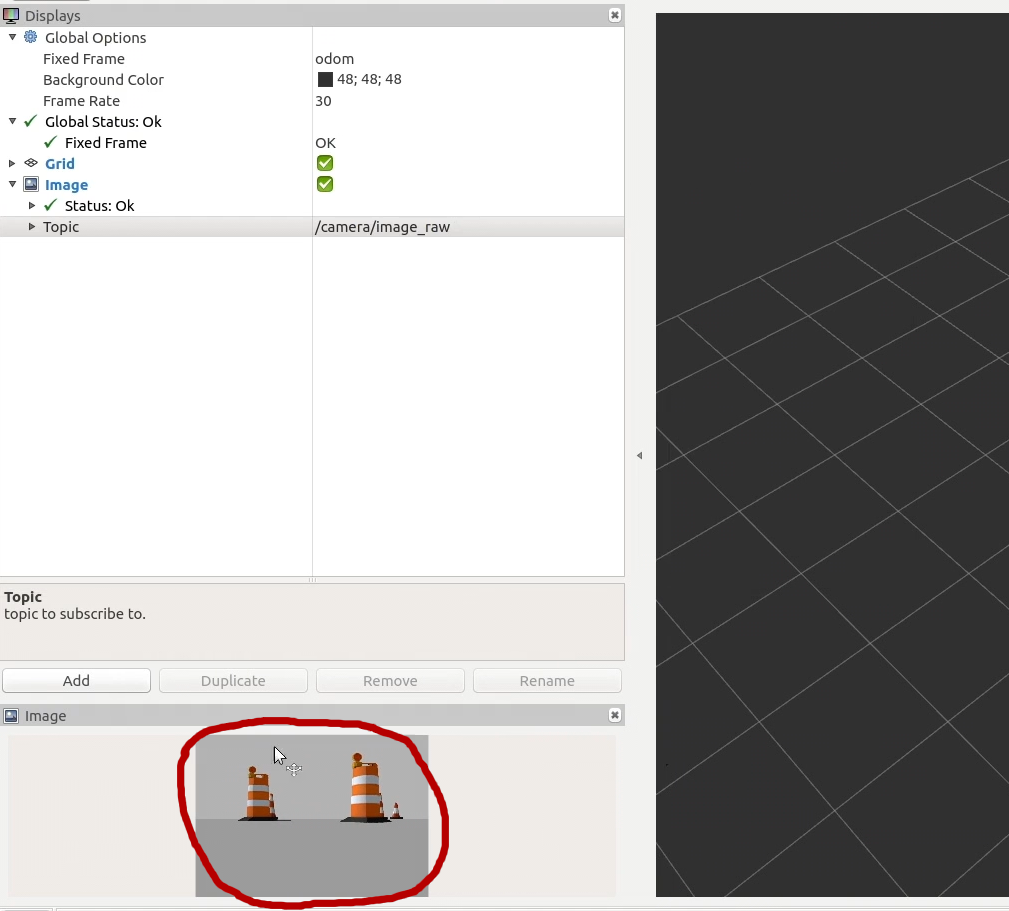

To check that the camera is actually being simulated correctly, start RViz and add an Image section. Set the topic to /camera/image_raw and make sure the Fixed Frame is set to something on your robot (e.g. base_link, or if that doesn't work, camera_link).

Note, I'm sure that in the past I had issues with the QoS not allowing me to see the data. If you are having trouble, try changing the Reliability policy to

Best Effort.

Hopefully you should see a little window into our simulated world!

RViz also offers the Camera display which is similar, but overlays the image onto the 3D view of the world. If we had other things displayed in RViz (e.g. models of other robots) and everything has been calibrated and undistorted correctly, this would let us check it all lines up.

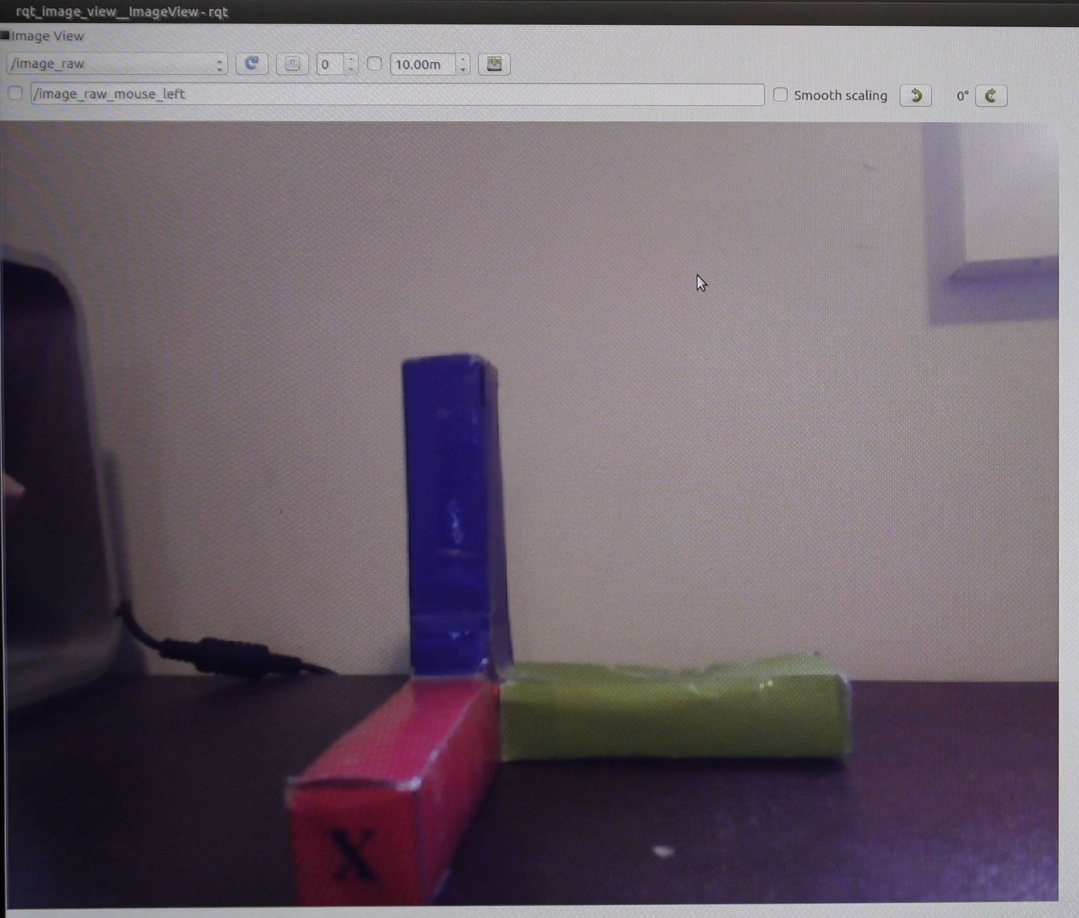

A couple of other nodes you can experiment with for viewing data are image_view and rqt_image_view, which are sometimes a bit less finicky than RViz, especially around transform frames and image compression.

Setting up the real camera

Now that we've got a virtual camera going, we can have a crack at a real camera. For this example I'll be using the Pi Camera V2

Note that the follwing instructions are not the only way to get this going and in fact are probably not the best way as they don't allow full control of the camera (e.g. sensitivity, shutter speed, etc.) it is just a way that worked for me.

Connecting things up

Before we start, we want to install a few packages (you may also want to install rqt_image_view here too):

sudo apt install libraspberrypi-bin v4l-utils ros-foxy-v4l2-camera ros-foxy-image-transport-plugins

Also, use the groups command to confirm you are already in the video group (to allow camera access).

If not, run sudo usermod -aG video $USER (this requires log out/restart to take effect).

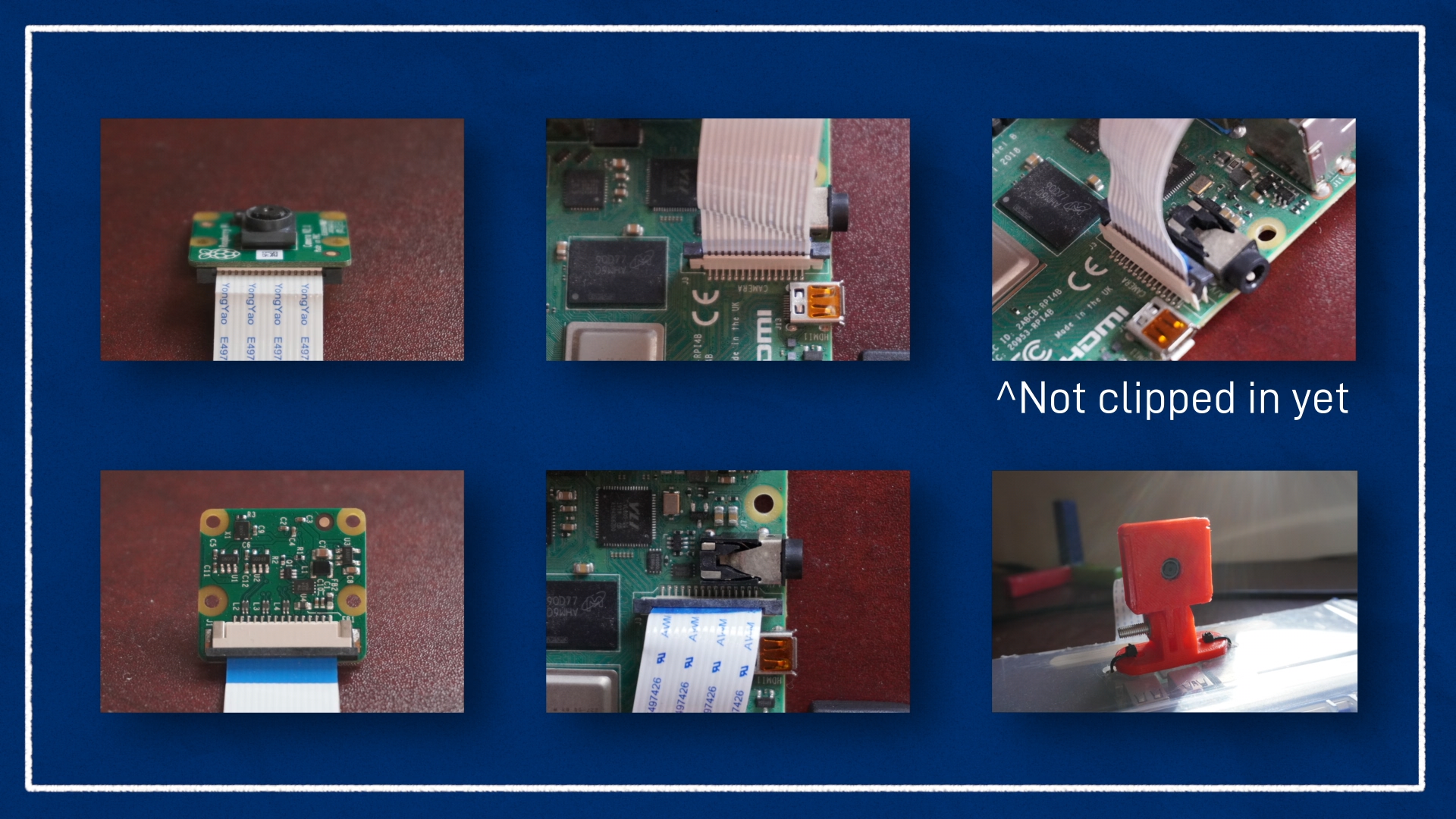

Next, shut down the Pi and plug in the camera. One end of the ribbon cable should go into the port on the board and the other end should go into the camera. Check out the images below to make sure you get the orientation correct (the blue bit on the ribbon should be on the same side as the black clip on the connector).

Now we can turn the Pi back on. First, let's check if it thinks the camera is connected:

vcgencmd get_camera

and we should see supported=1 detected=1 in response.

If we've got a screen connected to the Pi, this is a good opportunity to test that the camera is actually working! We can run raspistill -k and the camera stream should come up on the screen, then press x and Enter to exit.

Then, we can run:

v4l2-ctl --list-devices

And there should be an entry for mmal service, platform bcm2835-v4l2, device /dev/video0. That tells us that the V4L2 subsystem can see the camera, which means we are good to move on!

Running the driver node

To run the V4L2 driver node, use the command below which sets the frame ID correctly (make sure you've closed Gazebo!) and also sets the image size. Note that this driver currently can't restrict frame rate, but that should be coming in a future update. You may also want to remap the topic to match Gazebo.

ros2 run v4l2_camera v4l2_camera_node --ros-args -p image_size:="[640,480]" -p camera_frame_id:=camera_optical_link

Below is an example of the output on /image_raw shown in rqt_image_view.

Launch File

To make things easier we can package it all up into a launch file, as shown below:

Decompressing and republishing image data

As one last note, if we ever need to manually deal with compression/decompression of images, we can first check which plugins image_transport has installed:

ros2 run image_transport list_transports

Then, to republish a topic we need to specify the type of the input, then the type of the output. We also need to remap some topics, which are in the format {in/out}/{type} (with no type for uncompressed/raw). For example, to remap from a compressed input topic to a raw output topic we use:

ros2 run image_transport republish compressed raw --ros-args -r in/compressed:=/camera/image_raw/compressed -r out:=/camera/my_uncompressed_image

Note, with

image_transport,rawmeans "uncompressed" and has nothing to do with the "raw" inimage_raw.

Conclusion

Now we've got a handle on how cameras and images work in ROS, we can simulate a camera in Gazebo, and connect to a real camera. In the next post, we'll keep looking at cameras, but specifically depth cameras which also return a distance to each pixel.